publications and conferences

Publications by category in reverse chronological order.

2024

-

Academic Language Use in Middle School Informational Writing. Cherish M Sarmiento, Adrea Truckenmiller, Eunsoo Cho, and 1 more author. Psychology in the Schools (in review), 2024.

Background: Understanding students’ use of academic language within informational writing is a key component of writing development throughout schooling. Additional research on the academic language variables that are most predictive of quality academic writing would help guide more targeted instruction. Aims: We evaluate Grade 5 and Grade 8 students’ writing (in the genre of descriptive informational writing in response to text) to determine which metrics may be the most indicative of academic language and what role they play in dimensions of writing ability. The roles include contributions to the dimensions of writing quantity, quality, and level of narrativity. Sample: Our sample consists of informational writing samples from 285 students from Grade 5 (n = 175) and Grade 8 (n = 110) in a Midwestern state in the United States. Methods: An individual differences design was used to compare several word- and sentence-level features to determine the degree to which the features signal academic language at the beginning and end of middle school. Results: Results suggest that frequency of longer words may be the most useful measure of academic language in informational writing and that several sentence-level metrics may challenge our current conceptualization of quality writing. Conclusion: Results provide promising avenues for the assessment of malleable aspects of academic language in writing.

-

Extending a Pretrained Language Model (BERT) using an Ontological Perspective to Classify Students’ Scientific Expertise Level from Written Responses. Heqiao Wang, Kevin Haudek, Amanda Manzanares, and 2 more authors. International Journal of Artificial Intelligence in Education (in review), 2024.

The complex and interdisciplinary nature of scientific concepts presents formidable challenges for students in developing their knowledge-in-use skills. The utilization of computerized analysis for evaluating students’ contextualized constructed responses offers a potential avenue for educators to develop personalized and scalable interventions, thus supporting the teaching and learning of science consistent with contemporary calls. While prior research in artificial intelligence has demonstrated the effectiveness of algorithms, including Bidirectional Encoder Representations from Transformers (BERT), in tasks like automated classification of constructed responses, these efforts have predominantly leaned towards text-level features, often overlooking the exploration of conceptual ideas embedded in students’ responses from a cognitive perspective. Despite BERT’s performance in downstream tasks, challenges may arise in domain-specific tasks, particularly in establishing knowledge connections between specialized and open domains. These challenges become pronounced in small-scale and imbalanced educational datasets, where the available information for fine-tuning is frequently inadequate to capture task-specific nuances and contextual details. The primary objective of the present study is to investigate the effectiveness of the established industry-standard pretrained language model BERT, when integrated with an ontological framework aligned with our science assessment, in classifying students’ expertise levels in scientific explanation.
Our findings indicate that while pretrained language models such as BERT contribute to enhanced performance in language-related tasks within educational contexts, using ontology-based systems to identify domain-specific terms and substitute them with their associated sibling terms in sentences can further improve classification model performance. Further, we qualitatively examined student responses and found that, as expected, the ontology framework identified and substituted key domain-specific terms in student responses, which led to more accurate predictive scores. The study explores the practical implementation of ontology in classrooms to facilitate formative assessment and formulate instructional strategies.

-

FEW Questions, Many Answers: Using Machine Learning Analysis to Assess How Students Connect Food-Energy-Water Concepts. Emily Royse, Amanda Manzanares, Heqiao Wang, and 10 more authors. Nature (in review), 2024.

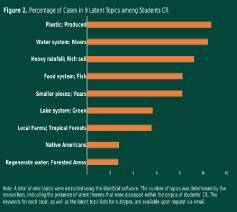

There is growing support for and interest in postsecondary interdisciplinary environmental education that integrates concepts and disciplines in addition to providing varied perspectives. There is a need to assess student learning in these programs as well as to rigorously evaluate educational practices, especially for complex synthesis concepts. This work tests a text classification machine learning model as a tool to assess student systems thinking capabilities, using two assessment items anchored by Food-Energy-Water (FEW) Nexus phenomena, and addresses two research questions: (1) Can machine learning models be used to identify instructor-determined important concepts in student responses? (2) What do college students know about the interconnections between food, energy and water, and how have students assimilated systems thinking into their constructed responses about FEW? Reported here are a broad range of model performances across 26 text classification models associated with two different assessment items, with model accuracy ranging from 0.755 to 0.992. Expert-like responses were infrequent in our dataset compared to responses providing simpler, incomplete explanations of the systems presented in the question. For those students moving from describing individual effects to multiple effects, their reasoning about the mechanism behind the system indicates advanced systems thinking ability. Specifically, students exhibit higher expertise in explaining changing water usage than in discussing tradeoffs for such changing usage. This research represents one of the first attempts to assess the links between foundational, discipline-specific concepts and systems thinking ability.
These text classification approaches to scoring student FEW Nexus Constructed Responses (CR) indicate how such approaches can be used, and point to several future research priorities for interdisciplinary, practice-based education research. Development of further complex question items using machine learning would allow evaluation of the relationship between foundational concept understanding and integration of those concepts, as well as a more nuanced understanding of student comprehension of complex interdisciplinary concepts.

-

CohBERT: Enhancing Language Representation through Coh-Metrix Linguistic Features for Analysis of Student Written Responses. Heqiao Wang and Xiaohu Lu. Computers and Education (in preparation), 2024.

-

A Synthesis of Research on Expository Science Text Comprehension. Eunsoo Cho, Cherish Sarmiento, Heqiao Wang, and 1 more author. In preparation, 2024.

-

Genre-specific writing motivation in late elementary-age children: Psychometric properties of the Situated Writing Activity and Motivation Scale. Gary Troia, Frank Lawrence, and Heqiao Wang. International Electronic Journal of Elementary Education (in review), 2024.

This study evaluated the latent structure, reliability, and criterion validity of the Situated Writing Activity and Motivation Scale (SWAMS) and determined if writing motivation measured by the SWAMS was different across narrative, informative, and persuasive genres using a sample of 397 fourth and fifth graders. Additionally, differences in writing motivation and essay quality attributable to sample sociodemographic characteristics were examined. Overall, the narrative, informative, and persuasive subscales of the SWAMS exhibited acceptable psychometric properties, though there were issues related to unidimensional model fit and item bias. A significant amount of unique variance in narrative, informative, and persuasive writing quality was explained by motivation for writing in each genre. Although we did not observe genre-based differences in overall motivation to write using summative scores for each subscale, there were small but significant differences between narrative and informative writing for the discrete motivational constructs of self-efficacy, outcome expectations, and task interest and value. Consistent differences favoring girls and students without special needs were observed on SWAMS scores, apparently linked with observed differences in writing performance. Limitations of the study and suggested uses of the SWAMS are discussed.

2023

-

How Students’ Writing Motivation, Teachers’ Personal and Professional Attributes, and Their Writing Instruction Impact Student Writing Achievement: A Two-Level Hierarchical Linear Modeling Study. Heqiao Wang and Gary A Troia. Frontiers in Psychology, 2023.

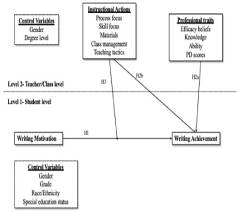

Student motivation to write is a pivotal factor influencing their writing achievement. However, individual motivation to write is not independent of the learning environment. It is also crucial for teachers to develop their own efficacy, knowledge, and ability in writing and writing instruction to help them utilize effective instructional methods that stimulate students’ motivation to write and further promote their writing achievement. Given these considerations, we utilized a two-level hierarchical linear model to examine the relationships among student motivation, teacher personal and professional traits, teacher writing instruction, and writing achievement at student and teacher levels. Our analysis of the dataset, which included 346 fourth and fifth graders nested within 41 classrooms, found that motivation had a positive predictive effect on writing ability at both student and teacher levels. Moreover, female students, fifth graders, and typically achieving students demonstrated higher writing achievement than their counterparts. While there were no significant effects of teacher efficacy, knowledge, ability, or professional development on student writing achievement, we observed that higher frequency of classroom management practices during writing instruction had a significant negative effect on student writing achievement. Our full model revealed that the relationship between student motivation and achievement was negatively moderated by teachers’ increased use of instructional practices related to process features and using writing instruction materials, but positively moderated by increased use of varied teaching tactics.
Overall, our findings emphasize the importance of contextual factors in understanding the complexity of student writing achievement and draw attention to the need for effective instructional practices to support students’ writing development.

@article{wang2023students,
  title = {How Students' Writing Motivation, Teachers' Personal and Professional Attributes, and Their Writing Instruction Impact Student Writing Achievement{:} A Two-Level Hierarchical Linear Modeling Study},
  author = {Wang, Heqiao and Troia, Gary A},
  journal = {Frontiers in Psychology},
  volume = {14},
  pages = {1213929},
  year = {2023},
  publisher = {Frontiers},
}

-

Is ChatGPT a Threat to Formative Assessment in College-Level Science? An Analysis of Linguistic and Content-Level Features to Classify Response Types. Heqiao Wang, Tingting Li, Kevin Haudek, and 5 more authors. In International Conference on Artificial Intelligence in Education Technology, 2023.

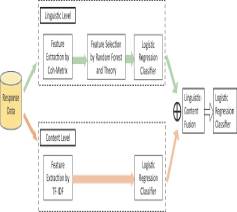

The impact of OpenAI’s ChatGPT on education has led to a reexamination of traditional pedagogical methods and assessments. However, ChatGPT’s performance capabilities on a wide range of assessments remain to be determined. This study aims to classify ChatGPT-generated and student constructed responses to a college-level environmental science question and explore the linguistic- and content-level features that can be used to address the differential use of language. The Coh-Metrix textual analysis tool was used to identify and extract linguistic and textual features. We then employed a random forest feature selection method to determine the most representative and nonredundant text-based features. We also employed TF-IDF metrics to represent the content of written responses. The performance of classification models for the responses was evaluated and compared in three scenarios: (a) using content-level features alone, (b) using linguistic-level features alone, and (c) using the combination of the two. The results demonstrated that the accuracy, specificity, sensitivity, and F1-score all increased when we used the combination of the two feature levels. The results of this study hold promise to provide valuable insights for instructors to detect student responses and integrate ChatGPT into their course development. This study also highlights the significance of linguistic- and content-level features in AI education research.

@inproceedings{wang2023chatgpt,
  title = {Is ChatGPT a Threat to Formative Assessment in College-Level Science? An Analysis of Linguistic and Content-Level Features to Classify Response Types},
  author = {Wang, Heqiao and Li, Tingting and Haudek, Kevin and Royse, Emily A and Manzanares, Mandy and Adams, Sol and Horne, Lydia and Romulo, Chelsie},
  booktitle = {International Conference on Artificial Intelligence in Education Technology},
  pages = {171--185},
  year = {2023},
  organization = {Springer},
}

-

Writing Quality Predictive Modeling: Integrating Register-Related Factors. Heqiao Wang and Gary A Troia. Written Communication, 2023.

The primary purpose of this study is to investigate the degree to which register knowledge, register-specific motivation, and diverse linguistic features are predictive of human judgment of writing quality in three registers: narrative, informative, and opinion. The secondary purpose is to compare the evaluation metrics of register-partitioned automated writing evaluation models in three conditions: (1) register-related factors alone, (2) linguistic features alone, and (3) the combination of these two. A total of 1006 essays (n = 327, 342, and 337 for informative, narrative, and opinion, respectively) written by 92 fourth- and fifth-graders were examined. A series of hierarchical linear regression analyses controlling for the effects of demographics were conducted to select the most useful features to capture text quality, scored by humans, in the three registers. These features were in turn entered into automated writing evaluation predictive models with tuning of the parameters in a tenfold cross-validation procedure. The average validity coefficients (i.e., quadratic-weighted kappa, Pearson correlation r, standardized mean score difference, score deviation analysis) were computed. The results demonstrate that (1) diverse feature sets are utilized to predict quality in the three registers, and (2) the combination of register-related factors and linguistic features increases the accuracy and validity of all human and automated scoring models, especially for the registers of informative and opinion writing. The findings from this study suggest that students’ register knowledge and register-specific motivation add predictive information when evaluating writing quality across registers beyond that afforded by linguistic features of the paper itself, whether using human scoring or automated evaluation.
These findings have practical implications for educational practitioners and scholars in that they can help strengthen consideration of register-specific writing skills and cognitive and motivational forces that are essential components of effective writing instruction and assessment.

@article{wang2023writing,
  title = {Writing Quality Predictive Modeling{:} Integrating Register-Related Factors},
  author = {Wang, Heqiao and Troia, Gary A},
  journal = {Written Communication},
  volume = {40},
  number = {4},
  pages = {1070--1112},
  year = {2023},
  publisher = {SAGE Publications Sage CA{:} Los Angeles, CA},
}

-

Exploratory Text Analysis of Student Constructed Responses to NGCI Assessment Items. Heqiao Wang and C. Kevin Haudek. In CREATE for STEM Conference, Michigan State University, East Lansing, MI, 2023.

Assessing students’ scientific writing skills presents a significant challenge in science education, primarily due to the subjectivity and time-consuming nature of the process. To address this challenge, we designed a novel approach that leverages both linguistic and content measures to automatically evaluate students’ scientific writing skills. We conducted exploratory data analysis to identify relevant features that can be incorporated into machine-learning algorithms to enhance model accuracy and reliability. Specifically, we utilized four assessment items in undergraduate environmental science on the Food-Energy-Water (FEW) nexus, all of which pertained to cause and effect science assessment. To ensure comprehensive evaluation of response features, we categorized the sub-questions of the cause and effect task into six subtypes, namely effect, tracing, system flows, predict, cause, and devise. Our primary objective is to compare how varying subtypes affect students’ writing about phenomena in the FEW nexus. A mixed-method approach was employed to analyze the data. In the quantitative analysis, we extracted 29 theoretically based features from the Coh-Metrix textual analysis tool to identify differences in language use across the four subtypes. In the qualitative analysis, we employed latent topic modeling to investigate the content of students’ responses and determine whether the vocabularies were consistent with the underlying topic content within each subtype. Overall, our approach offers a comprehensive evaluation of students’ scientific writing, which holds promise to inform future automated evaluation of science assessments.

-

Development of a Next Generation Concept Inventory with AI-based Evaluation for College Environmental Programs. Kevin Haudek, Chelsie Romulo, Steve Anderson, and 8 more authors. In AERA Annual Meeting, Chicago, IL: American Educational Research Association, 2023.

Interdisciplinary environmental programs (EPs) are increasingly popular in U.S. universities, but lack consensus about core concepts and assessments aligned to these concepts. To address this, we are developing assessments for evaluating undergraduates’ foundational knowledge in EPs and their ability to use complex systems-level concepts within the context of the Food-Energy-Water (FEW) Nexus. Specifically, we have applied a framework for developing and evaluating constructed response (CR) questions in science to create a Next Generation Concept Inventory in EPs, along with machine learning (ML) text scoring models. Building from previous research, we identified four key activities for assessment prompts: Explaining connections between FEW, Identifying sources of FEW, Cause & Effect of FEW usage, and Tradeoffs. We developed three sets of CR items for these four activities using different phenomena as contexts. To pilot our initial items, we collected responses from over 700 EP undergraduates across seven institutions to begin coding rubric development. We developed a series of analytic coding rubrics to identify students’ scientific and informal ideas in CRs and how students connect scientific ideas related to FEW. Human raters have demonstrated moderate to good levels of agreement on CRs (0.72-0.85) using these rubrics. We have used a small set of coded responses to begin development of supervised ML text classification models. Overall, these ML models have acceptable accuracy (M = .89, SD = .08) but exhibit a wide range of other model metrics. This underscores the challenges of using ML-based evaluation for complex and interdisciplinary assessments.

2022

-

Latent Profiles of Writing-related Skills, Knowledge, and Motivation for Elementary Students and Their Relations to Writing Performance Across Multiple Genres. Gary A Troia, Heqiao Wang, and Frank R Lawrence. Contemporary Educational Psychology, 2022.

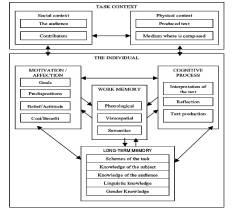

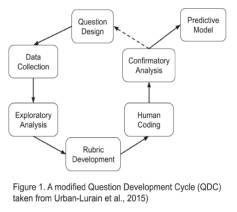

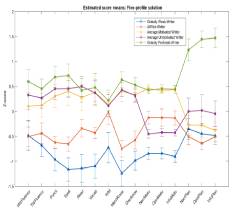

Our goal in this study is to expand the limited research on writer profiles using the advantageous model-based approach of latent profile analysis and independent tasks to evaluate aspects of individual knowledge, motivation, and cognitive processes that align with Hayes’ (1996) writing framework, which has received empirical support. We address three research questions. First, what latent profiles are observed for late elementary writers using measures aligned with an empirically validated model of writing? Second, do student sociodemographic characteristics (namely grade, gender, race, English learner status, and special education status) influence latent profile membership? Third, how does student performance on narrative, opinion, and informative writing tasks, determined by quality of writing, vary by latent profile? A five-profile model had the best fit statistics and classified student writers as Globally Weak, At Risk, Average Motivated, Average Unmotivated, and Globally Proficient. Overall, fifth graders, female students, White students, native English speakers, and students without disabilities had greater odds of being in the Globally Proficient group of writers. For all three genres, other latent profiles were significantly inversely related to the average quality of papers written by students who were classified as Globally Proficient; however, the Globally Weak and At Risk writers were not significantly different in their writing quality, and the Average Motivated and Average Unmotivated writers did not significantly differ from each other with respect to quality.
These findings indicate upper elementary students exhibit distinct patterns of writing-related strengths and weaknesses that necessitate comprehensive yet differentiated instruction to address skills, knowledge, and motivation to yield desirable outcomes.

@article{troia2022latent,
  title = {Latent Profiles of Writing-related Skills, Knowledge, and Motivation for Elementary Students and Their Relations to Writing Performance Across Multiple Genres},
  author = {Troia, Gary A and Wang, Heqiao and Lawrence, Frank R},
  journal = {Contemporary Educational Psychology},
  volume = {71},
  pages = {102100},
  year = {2022},
  publisher = {Elsevier},
}

-

Teaching Effective Revising Strategy to Improve Writing Performance for Children With Learning Disabilities in the United States: A Review. Mei Shen and Heqiao Wang. Modern Special Education (Chinese), 2022.

Writing is an important part of a student’s academic achievement. For children with learning disabilities, writing is critical, complex, and challenging as well. Previous research has shown that the writing performance of most children with learning disabilities is significantly lower than that of their typically developing peers. They also seldom engage in effective revising behaviors. Researchers in the United States have conducted revising strategy instruction for these struggling writers, and the students’ overall writing performance has been shown to improve significantly after receiving the strategy instruction. Being informed of the writing research in the United States will enable us to deepen our understanding of the effectiveness of revising strategy instruction on the writing performance of students with learning disabilities, which will further promote writing research on conducting appropriate revising instruction for struggling writers in China.

@article{wang2022revising,
  title = {Teaching Effective Revising Strategy to Improve Writing Performance for Children With Learning Disabilities in the United States{:} A Review},
  author = {Shen, Mei and Wang, Heqiao},
  journal = {Modern Special Education (Chinese)},
  volume = {1},
  number = {G769},
  pages = {77--79},
  year = {2022},
  publisher = {Jiangsu Education Press and Periodicals Headquarters, Jiangsu, China},
}

-

Prospects and Challenges of Using MTSS for Children with Written Language Disorders. Amna Agha, Cherish Sarmiento, Katie Valentine, and 3 more authors. Unpublished, 2022.

Given the recent advocacy for using a science-based lens in conducting educational research and practice and developing educational policy, multi-tiered systems of support (MTSS) is designed to provide a systematic framework for all target students by adopting evidence-based intervention, monitoring progress, and making instructional decisions with assessment data. The breadth and depth of MTSS implementation across states and nations requires discussion of the policy guidance and recommendations behind it. However, so far few studies have investigated the factors that cause variability in MTSS implementation. Even less is known regarding the academic intervention models for children at risk for or identified with language disorders. The primary purpose of this article was to synthesize the existing MTSS policy recommendations at the federal, state, and local levels that may address current implementation barriers. The secondary objective was to demonstrate practical guidance from some representative case examples from the U.S. and other countries. We highlight diverse factors at different levels that may impact implementation of MTSS, such as the availability (or lack thereof) of financial support, teachers’ preparedness for instructing target children, and cooperation between practitioners and researchers. More importantly, we also identified a paucity of MTSS frameworks developed for students with writing difficulties. Implications and future directions for MTSS implementation are discussed.